07 - Text Extraction and OCR

Extracting text and transforming it to qualitative data is challenging when you have the output format (PDF or image) and need to reduce it to the source format of textual data.

Extracting text from files is a fragile process if you don’t know what to expect from the format of the input files. The Java-based Apache Tika can handle hundreds of file extensions, has a Python port and is your best option. If you are looking for other (Python) packages to extract text, always check the underlying required packages.

However, Tika-outputs are often not perfect. Especially the PDF format is a challenging one. PDF was designed as an output format, resulting in a perfectly readable document, but is difficult to reduce the file back to the source text. Especially the broken lines for PDFs are difficult to clean. Should you remove the line-end to restore the sentence, or does this has the effect that you just merged a title with no ending dot with another sentence. Also PDFs with multiple columns on a page are often wrongly parsed.

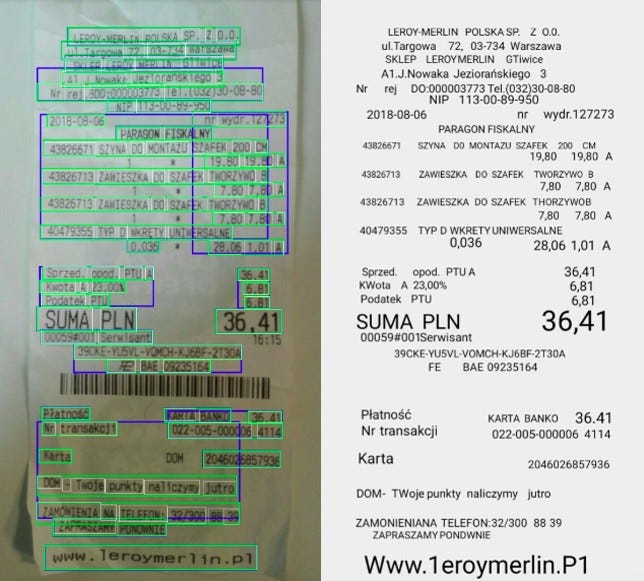

OCR on a Polish receipt (source)

Older PDFs do have extra challenges, because they may contain images of text and not digital text. For this an Optical Character Recognition (OCR) model is needed that is trained for your language and Font type. It has a lot of subtasks like adjusting the scan to the right angle, converting the image from color to black and white, smoothing edges, normalizing the aspect ratio and analyzing the layout for zones like columns, paragraphs or tables. Widely used libraries are OpenCV and Tesseract-OCR which was started decades ago by HP and is now maintained by Google. There is also a Python wrapper.

This article is part of the project Periodic Table of NLP Tasks. Click to read more about the making of the Periodic Table and the project to systemize NLP tasks.