43 - Training Models

Training language models should start with a simple baseline, which is then improved with more complex techniques.

Training NLP models is a broad topic. It's best to start light and improve later. You can start by spending two hours building a rule-based model and seeing how well it scores. Take this as your baseline score. Then try to improve on it with a simple technique such as a regression model. If you want to go further, try training a deep learning model.
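The progression from a rule-based baseline to a simple regression model can be sketched as below. This is a minimal illustration, not a recommended setup: the sentiment task, the tiny dataset, the cue-word lists and all function names are made up for the example, and the logistic regression is a hand-rolled bag-of-words version so the snippet runs without extra libraries.

```python
import math

# Toy, made-up sentiment data (1 = positive, 0 = negative); purely illustrative.
TRAIN = [
    ("great movie loved it", 1),
    ("wonderful acting and a great story", 1),
    ("loved the wonderful soundtrack", 1),
    ("enjoyable and fun", 1),
    ("terrible plot hated it", 0),
    ("boring and awful acting", 0),
    ("hated the boring story", 0),
    ("awful and dull", 0),
]
TEST = [
    ("a great and fun story", 1),
    ("enjoyable soundtrack", 1),
    ("dull and terrible", 0),
    ("boring movie", 0),
]

# Hypothetical hand-picked cue words for the rule-based baseline.
POSITIVE_WORDS = {"great", "loved", "wonderful"}
NEGATIVE_WORDS = {"terrible", "hated", "awful"}


def rule_based_predict(text):
    """Step 1: hand-written rule. Count cue words; ties count as negative."""
    tokens = text.split()
    score = sum(t in POSITIVE_WORDS for t in tokens)
    score -= sum(t in NEGATIVE_WORDS for t in tokens)
    return 1 if score > 0 else 0


def train_logistic_regression(data, epochs=200, lr=0.5):
    """Step 2: bag-of-words logistic regression trained with plain SGD."""
    weights, bias = {}, 0.0
    for _ in range(epochs):
        for text, label in data:
            tokens = text.split()
            z = bias + sum(weights.get(t, 0.0) for t in tokens)
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of "positive"
            error = label - p
            bias += lr * error
            for t in tokens:
                weights[t] = weights.get(t, 0.0) + lr * error
    return weights, bias


def lr_predict(model, text):
    """Classify with the learned weights; unseen words contribute nothing."""
    weights, bias = model
    z = bias + sum(weights.get(t, 0.0) for t in text.split())
    return 1 if z > 0 else 0


def accuracy(predict, data):
    """Fraction of examples where the prediction matches the label."""
    return sum(predict(text) == label for text, label in data) / len(data)


baseline_acc = accuracy(rule_based_predict, TEST)
model = train_logistic_regression(TRAIN)
lr_acc = accuracy(lambda text: lr_predict(model, text), TEST)

print(f"rule-based baseline: {baseline_acc:.2f}")
print(f"logistic regression: {lr_acc:.2f}")
```

The point of the sketch is the workflow rather than the numbers: both models are scored on the same held-out examples, so the baseline gives a fixed reference against which each more complex model can be compared.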

The more complex your model, the longer the training time. Higher performance requires better hardware: instead of CPUs you might need GPUs or TPUs.

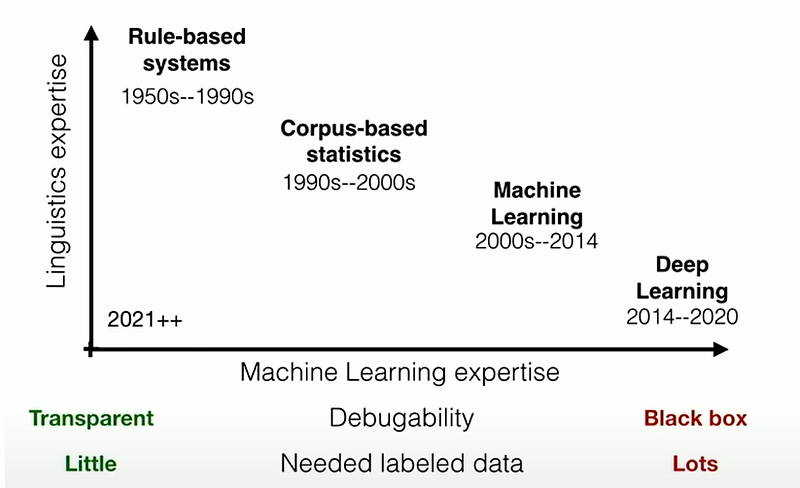

Yoav Goldberg has talked about the expertise required to build NLP models. His vision is that in the future (2021 and beyond) humans will not need much ML or linguistic expertise. Instead, they will write rules, aided by ML/DL, resulting in transparent and debuggable models.

This article is part of the Periodic Table of NLP Tasks project. Click to read more about the making of the Periodic Table and the project to systemize NLP tasks.