45 - Explaining Models

Being able to explain the outcomes of your NLP model increases transparency and helps prevent distrust.

The business will often require that your model can explain its outcomes, for example to detect and prevent racial bias in sentiment analysis.

ELI5 (Explain Like I’m 5) is a Python package that helps debug machine learning classifiers and explain their predictions. It supports several machine learning frameworks, such as scikit-learn and Keras.

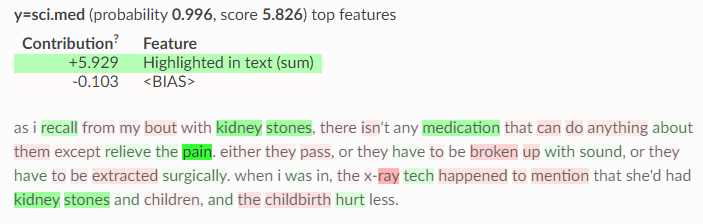

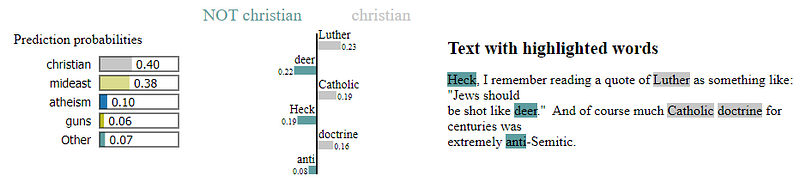
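For linear text classifiers, the explanation ELI5 shows for a single prediction is essentially a table of per-feature contributions: each word's learned weight multiplied by its value in the input, plus a bias term. The sketch below reproduces that idea in plain Python under stated assumptions: `WEIGHTS`, `BIAS` and `explain_linear_prediction` are hypothetical names with hand-set values, not part of ELI5's API, and with a real trained model you would use its learned coefficients instead.

```python
from collections import Counter

# Hypothetical weights of a linear bag-of-words sentiment classifier.
# A trained model (e.g. a logistic regression) would learn these values.
WEIGHTS = {"great": 1.8, "love": 1.2, "boring": -1.5, "terrible": -2.3}
BIAS = 0.1


def explain_linear_prediction(text, weights=WEIGHTS, bias=BIAS):
    """Return (score, ranked contributions) where contribution = weight * count."""
    counts = Counter(text.lower().split())
    contributions = {"<BIAS>": bias}
    for word, count in counts.items():
        if word in weights:
            contributions[word] = weights[word] * count
    # For a linear model the score is exactly the sum of the contributions.
    score = sum(contributions.values())
    # Largest absolute contribution first, like a feature-weight table.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


score, ranked = explain_linear_prediction("A great cast but a boring boring plot")
print(f"score = {score:+.2f} ({'positive' if score > 0 else 'negative'})")
for feature, contribution in ranked:
    print(f"{contribution:+.2f}  {feature}")
```

Because the two occurrences of "boring" outweigh "great", the table makes it immediately visible why this review is classified as negative.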

LIME (Local Interpretable Model-Agnostic Explanations) explains individual predictions of any classifier, including text classifiers, by fitting a simple interpretable model locally around each prediction:

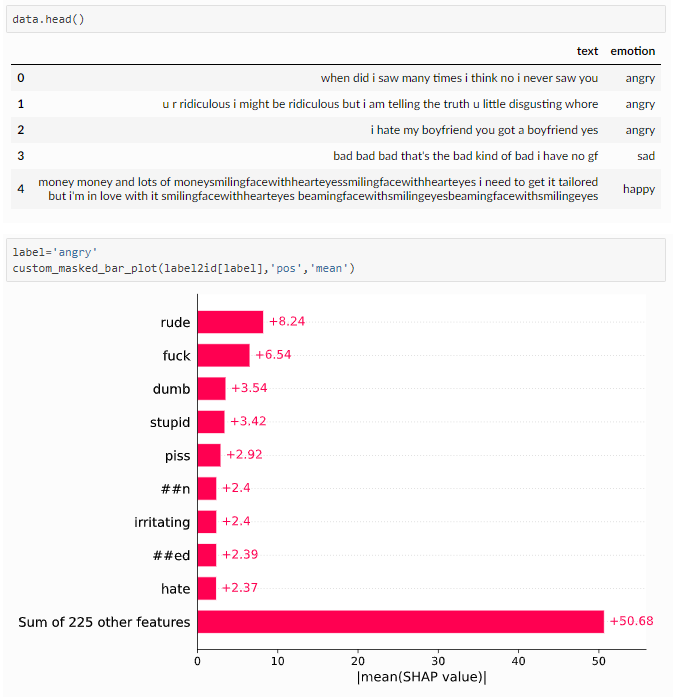
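The LIME procedure for text can be sketched in plain Python: perturb the input by dropping words, query the black-box classifier on each perturbed text, weight every sample by how close it is to the original (an exponential kernel on cosine distance), and fit a weighted linear model whose coefficients become the word importances. This is a minimal sketch, not the `lime` package's API: `black_box_proba` is a hypothetical stand-in classifier, each word is kept with probability 1/2 (the real package samples perturbations differently), and the kernel width and ridge penalty are illustrative choices.

```python
import math
import random


def black_box_proba(text):
    """Hypothetical opaque classifier: probability that `text` is positive."""
    weights = {"great": 2.0, "love": 1.5, "boring": -1.0, "terrible": -2.5}
    score = sum(weights.get(w, 0.0) for w in text.lower().split())
    return 1.0 / (1.0 + math.exp(-score))


def solve(a, b):
    """Solve the small dense system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_explain(text, predict, num_samples=1000, kernel_width=0.25, ridge=1e-3, seed=0):
    """Return (word, weight) pairs from a locally weighted linear surrogate."""
    words = text.split()
    d = len(words)
    rng = random.Random(seed)
    # 1. Perturb: binary masks saying which words are kept (first = original text).
    masks = [[1] * d] + [[rng.randint(0, 1) for _ in range(d)] for _ in range(num_samples - 1)]
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for mask in masks:
        # 2. Query the black box on the perturbed text.
        y = predict(" ".join(w for w, keep in zip(words, mask) if keep))
        # 3. Proximity weight: cosine distance between the mask and the all-ones vector.
        dist = 1.0 - math.sqrt(sum(mask) / d)
        weight = math.exp(-(dist ** 2) / kernel_width ** 2)
        # 4. Accumulate weighted normal equations for features [intercept, mask...].
        x = [1.0] + [float(v) for v in mask]
        for i in range(d + 1):
            xty[i] += weight * x[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * x[i] * x[j]
    for i in range(1, d + 1):
        xtx[i][i] += ridge  # small ridge penalty; the intercept is left unpenalized
    coef = solve(xtx, xty)
    return list(zip(words, coef[1:]))


explanation = lime_explain("I love this great but boring film", black_box_proba)
for word, weight in sorted(explanation, key=lambda p: abs(p[1]), reverse=True):
    print(f"{weight:+.3f}  {word}")
```

The surrogate is only trusted near the original sentence, which is why samples that drop most of the words receive almost no weight.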

SHAP (SHapley Additive exPlanations) is a game-theoretic approach to explaining the output of any machine learning model.
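SHAP attributes a prediction to the input features using Shapley values: each word's value is its average marginal contribution to the model output over all coalitions of the other words, weighted by $|S|!\,(n-|S|-1)!/n!$. For a short sentence these can be computed exactly by brute force. This is a sketch under assumptions: `black_box_proba` is a hypothetical classifier, and a word "absent" from a coalition is simply deleted from the text, which is an illustrative choice rather than how the shap library masks text.

```python
import math
from itertools import combinations


def black_box_proba(text):
    """Hypothetical opaque classifier: probability that `text` is positive."""
    weights = {"great": 2.0, "love": 1.5, "boring": -1.0, "terrible": -2.5}
    score = sum(weights.get(w, 0.0) for w in text.lower().split())
    return 1.0 / (1.0 + math.exp(-score))


def shapley_values(text, predict):
    """Exact Shapley value of each word; a word is 'absent' when it is deleted."""
    words = text.split()
    n = len(words)

    def value(coalition):
        # Model output when only the words at these positions are kept.
        return predict(" ".join(words[i] for i in sorted(coalition)))

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for every coalition S of this size.
            w = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                total += w * (value(subset + (i,)) - value(subset))
        phi.append(total)
    return list(zip(words, phi))


text = "I love this great but boring film"
explanation = shapley_values(text, black_box_proba)
for word, contribution in explanation:
    print(f"{contribution:+.3f}  {word}")
# Efficiency property: contributions sum to f(full text) - f(empty text).
print(sum(c for _, c in explanation), black_box_proba(text) - black_box_proba(""))
```

Exact enumeration visits $2^{n-1}$ coalitions per word, so it only works for a handful of words; this is why practical tools approximate Shapley values, for example by sampling coalitions as the shap library's `KernelExplainer` does.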

This article is part of the Periodic Table of NLP Tasks, a project to systematize NLP tasks.