70 - Question Answering

Answering questions posed by humans in a natural language, regardless of the format of the question.

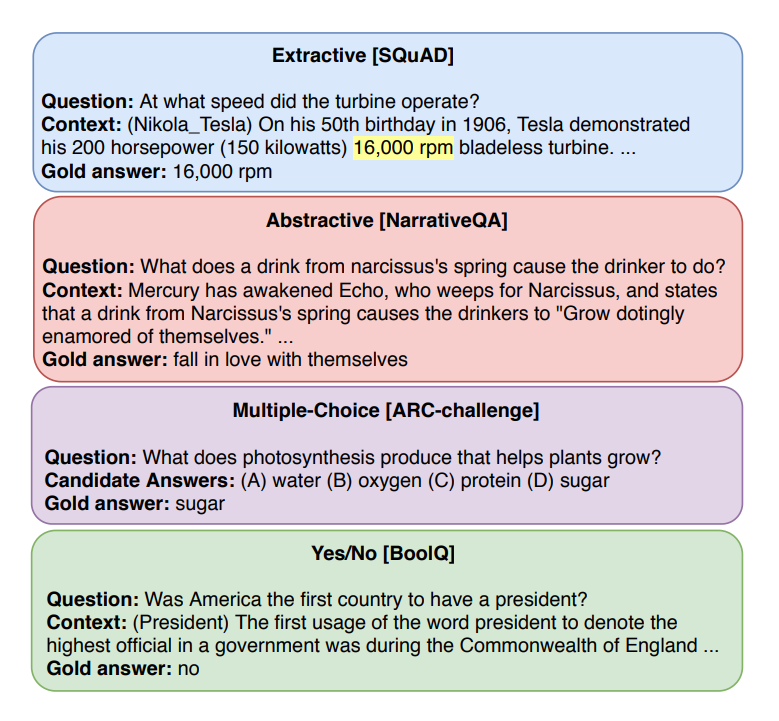

Question Answering is the task of automatically answering questions posed by humans in natural language. A question can be answered in different settings, such as abstractive, extractive, boolean and multiple-choice QA.

Extractive QA aims to extract a substring of the reference text as the answer. Abstractive QA aims to generate an answer based on the reference text; the answer need not be a substring of it. Boolean QA covers questions that are answered with yes or no. Multiple-choice QA offers several options to choose from.
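To make the four settings concrete, here is a minimal Python sketch. The `QAExample` class, its field names and the sample text are illustrative assumptions, not a standard schema. The sketch mainly shows the property that separates extractive from abstractive answers: an extractive answer occurs verbatim in the reference text.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QAExample:
    """Hypothetical container for one QA item; field names are illustrative."""
    question: str
    answer: str
    context: Optional[str] = None        # reference text, if any
    options: Optional[List[str]] = None  # only set for multiple-choice items


def qa_format(example: QAExample) -> str:
    """Classify an example into one of the four settings described above."""
    if example.options is not None:
        return "multiple-choice"
    if example.answer.lower() in ("yes", "no"):
        return "boolean"
    # Extractive: the answer is a literal substring of the reference text.
    if example.context is not None and example.answer in example.context:
        return "extractive"
    # Otherwise the answer had to be generated rather than copied.
    return "abstractive"


context = "Marie Curie won the Nobel Prize in Physics in 1903."
examples = [
    QAExample("When did Curie win the Physics prize?", "1903", context),
    QAExample("What was Curie recognised for?", "Her work in physics", context),
    QAExample("Did Curie win a Nobel Prize?", "yes", context),
    QAExample("Which prize did Curie win?", "Nobel", context, ["Nobel", "Turing"]),
]
formats = [qa_format(e) for e in examples]
print(formats)
```

In practice the format is a property of the dataset and the model's output head rather than something inferred from the answer string; the classifier here only illustrates the definitions.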

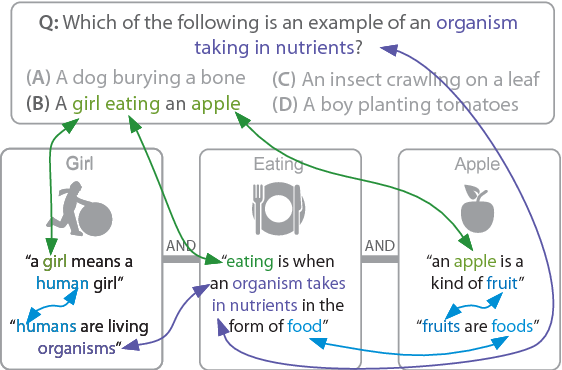

A variant of regular question answering is multi-hop question answering, which requires a model to gather information from different parts of a text to answer a question.

A special feature of a QA system is the option to decline to answer a question, or to answer ‘idk’ (I don’t know). SQuAD is an example. The SQuAD 1.0 training set consists of reference texts with questions that can always be answered from the text. The improved SQuAD 2.0 dataset adds questions that cannot be answered.
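A minimal sketch of how abstention could work in an extractive system. The record layout uses the field names found in the SQuAD 2.0 JSON (`answers`, `answer_start`, `is_impossible`), simplified to a flat list. The `choose_answer` function, the scores and the threshold are hypothetical stand-ins for what a trained model would produce.

```python
from typing import Dict, List, Tuple

context = "The Amazon River flows through Brazil, Peru and Colombia."

# Two simplified records in the style of SQuAD 2.0: one answerable, one not.
records: List[Dict] = [
    {
        "question": "Which countries does the Amazon River flow through?",
        # answer_start is a character offset into the context.
        "answers": [{"text": "Brazil, Peru and Colombia", "answer_start": 31}],
        "is_impossible": False,
    },
    {
        "question": "How long is the Amazon River?",
        "answers": [],            # the context does not contain the answer
        "is_impossible": True,
    },
]


def choose_answer(best_span: Tuple[str, float], null_score: float,
                  threshold: float = 0.0) -> str:
    """Return the best span, or an empty string to abstain.

    Abstain when the no-answer score exceeds the best span score by more
    than `threshold` (a hypothetical, tunable hyperparameter).
    """
    span_text, span_score = best_span
    if null_score - span_score > threshold:
        return ""
    return span_text


# Made-up scores: a confident span versus a weak span with a strong null score.
answered = choose_answer(("Brazil, Peru and Colombia", 7.2), null_score=1.5)
abstained = choose_answer(("Brazil", 0.8), null_score=4.1)
print(repr(answered), repr(abstained))
```

Raising the threshold makes the system answer more often; lowering it makes the system abstain more often.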

As shown, researchers often treat the different formats as distinct problems. AllenAI, however, built UnifiedQA, a T5 (Text-to-Text Transfer Transformer) model trained on all of these QA formats. You can try their demo.
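A single text-to-text model can cover several formats because every question, with its optional choices and reference text, can be flattened into one input string. The sketch below illustrates that idea. The function name `to_text_input`, the separator string and the lettered option style are assumptions modelled on the general text-to-text approach; check the UnifiedQA documentation for the exact encoding the released models expect.

```python
from typing import List, Optional


def to_text_input(question: str,
                  context: Optional[str] = None,
                  options: Optional[List[str]] = None,
                  sep: str = " \\n ") -> str:
    """Flatten one QA item into a single string for a text-to-text model.

    `sep` is an assumed separator (a literal backslash followed by 'n',
    padded with spaces); it is a parameter so it can be swapped out.
    """
    parts = [question]
    if options:
        letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
        # Render choices as "(A) first (B) second ...".
        parts.append(" ".join(f"({letters[i]}) {opt}"
                              for i, opt in enumerate(options)))
    if context:
        parts.append(context)
    return sep.join(parts)


extractive = to_text_input(
    "Who wrote Hamlet?",
    context="Hamlet is a tragedy by William Shakespeare.",
)
multiple_choice = to_text_input(
    "Which is the best conductor?",
    options=["iron", "feather"],
)
print(extractive)
print(multiple_choice)
```

The model's output is plain text in every case, so the same decoder can produce a span, a free-form answer, "yes"/"no", or the text of one option.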

Another variant, often called closed-book QA, has no reference text to support the question. The required knowledge has to come from the model itself: it is stored in the model's parameters, which picked it up during unsupervised pre-training. You can give this demo a try.

This article is part of the project Periodic Table of NLP Tasks. Click to read more about the making of the Periodic Table and the project to systematize NLP tasks.