63 - Next Token Prediction

Next token prediction is the task of predicting the word that most plausibly comes next in a given context.

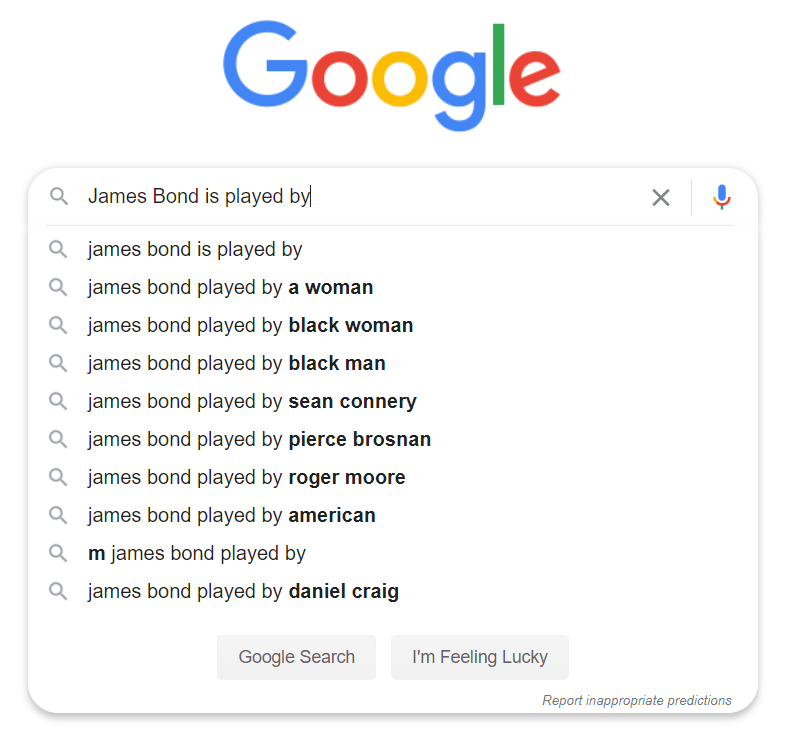

Can I help you by predicting the next word you will type? Many apps offer this as an auto-complete feature that suggests words while the user is typing.

N-gram language models are a simple solution for next token prediction. An N-gram model assigns a probability to a sequence of words, estimated from how often short runs of N consecutive words occur in a training corpus, so that more likely sequences receive higher scores. For example, ‘I have a pen’ is expected to have a higher probability than ‘I am a pen’, since the first is a more natural sentence in the real world.
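
As a rough illustration of the N-gram idea, here is a minimal bigram (N = 2) sketch in Python. The toy corpus and the function names (`train_bigram_model`, `predict_next`, `sentence_probability`) are made up for this example, and there is no smoothing, so any unseen word pair gets probability zero. A real model would be trained on far more text.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> mark the start and end of a sentence.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev, k=3):
    """Return up to k (word, probability) pairs that most often follow `prev`."""
    followers = counts.get(prev.lower())
    if not followers:
        return []
    total = sum(followers.values())
    return [(word, n / total) for word, n in followers.most_common(k)]


def sentence_probability(counts, sentence):
    """Multiply the bigram probabilities along the sentence (no smoothing)."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        followers = counts.get(prev)
        if not followers:
            return 0.0
        prob *= followers[nxt] / sum(followers.values())
    return prob


# Hypothetical toy corpus, invented for this example.
corpus = [
    "I have a pen",
    "I have a book",
    "I have a pen and a book",
    "I am a student",
]
model = train_bigram_model(corpus)

# "have" ranks first: it follows "i" in three of the four sentences.
suggestions = predict_next(model, "I")

p_have = sentence_probability(model, "I have a pen")
p_am = sentence_probability(model, "I am a pen")
print(suggestions, p_have > p_am)
```

On this corpus the model scores ‘I have a pen’ above ‘I am a pen’, simply because ‘I have’ was seen more often than ‘I am’.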

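Long Short-Term Memory (LSTM) networks are a more advanced approach. An LSTM is a recurrent neural network that carries a learned state from word to word, so it can draw on context beyond the fixed window of an N-gram model, and it typically performs better. It also does not need a count for every possible N-gram, because it generalizes from learned parameters instead.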
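
To show how an LSTM keeps track of earlier words, here is a sketch of a single LSTM cell in plain Python. It is untrained: the weights are random, the class name `TinyLSTMCell` and the five-word vocabulary are invented for this example, and a real next-word model would add an embedding layer, an output layer over the vocabulary, and training. The only point is that the state after reading a prefix depends on every word in it, not just the last one.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTMCell:
    """Untrained LSTM cell over one-hot token inputs (illustration only)."""

    GATES = "ifog"  # input, forget, output, candidate

    def __init__(self, vocab_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size

        def matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.w_x = {g: matrix(hidden_size, vocab_size) for g in self.GATES}
        self.w_h = {g: matrix(hidden_size, hidden_size) for g in self.GATES}
        self.b = {g: [0.0] * hidden_size for g in self.GATES}

    def step(self, token_id, h, c):
        """Consume one token and return the updated (hidden, cell) state."""

        def pre(gate, j):
            # With a one-hot input, W_x @ x is just column `token_id` of W_x.
            recurrent = sum(self.w_h[gate][j][k] * h[k]
                            for k in range(self.hidden_size))
            return self.w_x[gate][j][token_id] + recurrent + self.b[gate][j]

        new_h, new_c = [], []
        for j in range(self.hidden_size):
            i_gate = sigmoid(pre("i", j))
            f_gate = sigmoid(pre("f", j))
            o_gate = sigmoid(pre("o", j))
            candidate = math.tanh(pre("g", j))
            # Keep part of the old memory and mix in part of the new candidate.
            c_j = f_gate * c[j] + i_gate * candidate
            new_c.append(c_j)
            new_h.append(o_gate * math.tanh(c_j))
        return new_h, new_c

    def encode(self, token_ids):
        """Run the cell over a whole sequence and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for token_id in token_ids:
            h, c = self.step(token_id, h, c)
        return h


# Hypothetical toy vocabulary, invented for this example.
vocab = {"i": 0, "have": 1, "am": 2, "a": 3, "pen": 4}
cell = TinyLSTMCell(vocab_size=len(vocab), hidden_size=4)

state_have = cell.encode([vocab[w] for w in "i have a".split()])
state_am = cell.encode([vocab[w] for w in "i am a".split()])

# Both prefixes end in "a", yet the states differ because of the earlier word.
print(state_have != state_am)
```

A bigram model sees only the last word ‘a’ and would treat both prefixes identically, while the LSTM state still reflects whether ‘have’ or ‘am’ came before it.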

This article is part of the project Periodic Table of NLP Tasks. Click to read more about the making of the Periodic Table and the project to systemize NLP tasks.